POKEMON AUGMENTED EXPERIENCE

Role: Unity Artist, Nuke Compositor

Software: Unity, Nuke

Created at We Are Royale

In late 2017, We Are Royale decided to take things beyond the usual 15-second spot and explore the possibilities of AR and VR. The Pokemon brand was the perfect candidate for venturing into this brave new world. We crafted a full digital experience based on one of the latest animated spots we had produced. That's how the Pokemon Augmented Experience app was born.

The experience was split into two sections, AR and VR, both contained in a single app that could be downloaded from the Apple App Store.

Below is the original 15 sec spot that inspired this internal project.

AR EXPERIENCE

Prior to this project, I had already been playing around with and learning Unity on my own time. By the time this came along, I was ready to take on the challenge and I gladly joined the team as a Unity Artist for the AR portion.

The AR experience took full advantage of the Trading Cards. We used the cards to bring Pokemon characters to life and battle each other right in front of you! The card worked as a trigger detected by the Vuforia plugin inside Unity. We worked alongside a Unity developer who was in charge of keeping things moving smoothly behind the scenes in the app build.

My assignment was more focused on the artistic side. Since my background is in compositing and animation, this was the perfect task for me. I worked with our Maya animation team to bring the models and animations made in Maya into Unity and, of course, make them look good! That included textures, lighting, FX and arranging all the animation takes for the two states. The idle state played when a Pokemon first appeared on screen (its card was detected). The activation state (ready to battle) played if another Pokemon was detected nearby. We always tried to keep things optimized for mobile devices. It was definitely a learning experience!
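The idle and activation logic described above can be sketched in a few lines. This is only an illustration in Python, not the code that ran in the app; the names `PokemonState` and `resolve_state` are made up for this example, and the `HIDDEN` state is an assumption about what happens when no card is tracked.

```python
from enum import Enum


class PokemonState(Enum):
    HIDDEN = "hidden"          # assumed: card is not currently tracked
    IDLE = "idle"              # card detected, no other Pokemon nearby
    ACTIVATION = "activation"  # card detected and another Pokemon nearby


def resolve_state(card_detected: bool, other_card_nearby: bool) -> PokemonState:
    """Pick which animation state a Pokemon should be in (hypothetical helper)."""
    if not card_detected:
        return PokemonState.HIDDEN
    if other_card_nearby:
        return PokemonState.ACTIVATION
    return PokemonState.IDLE


if __name__ == "__main__":
    # One card on the table: the Pokemon appears and plays its idle take.
    print(resolve_state(card_detected=True, other_card_nearby=False).value)
    # A second card comes into view: switch to the ready-to-battle take.
    print(resolve_state(card_detected=True, other_card_nearby=True).value)
```

Keeping the decision in one small function like this makes it easy to map each state to its own animation take.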

Another aspect of the AR experience involved using the poster key art to bring the characters and worlds to life. Think of it as a window into a different dimension. The poster was based on the 15 sec spot and featured Solgaleo and Lunala.

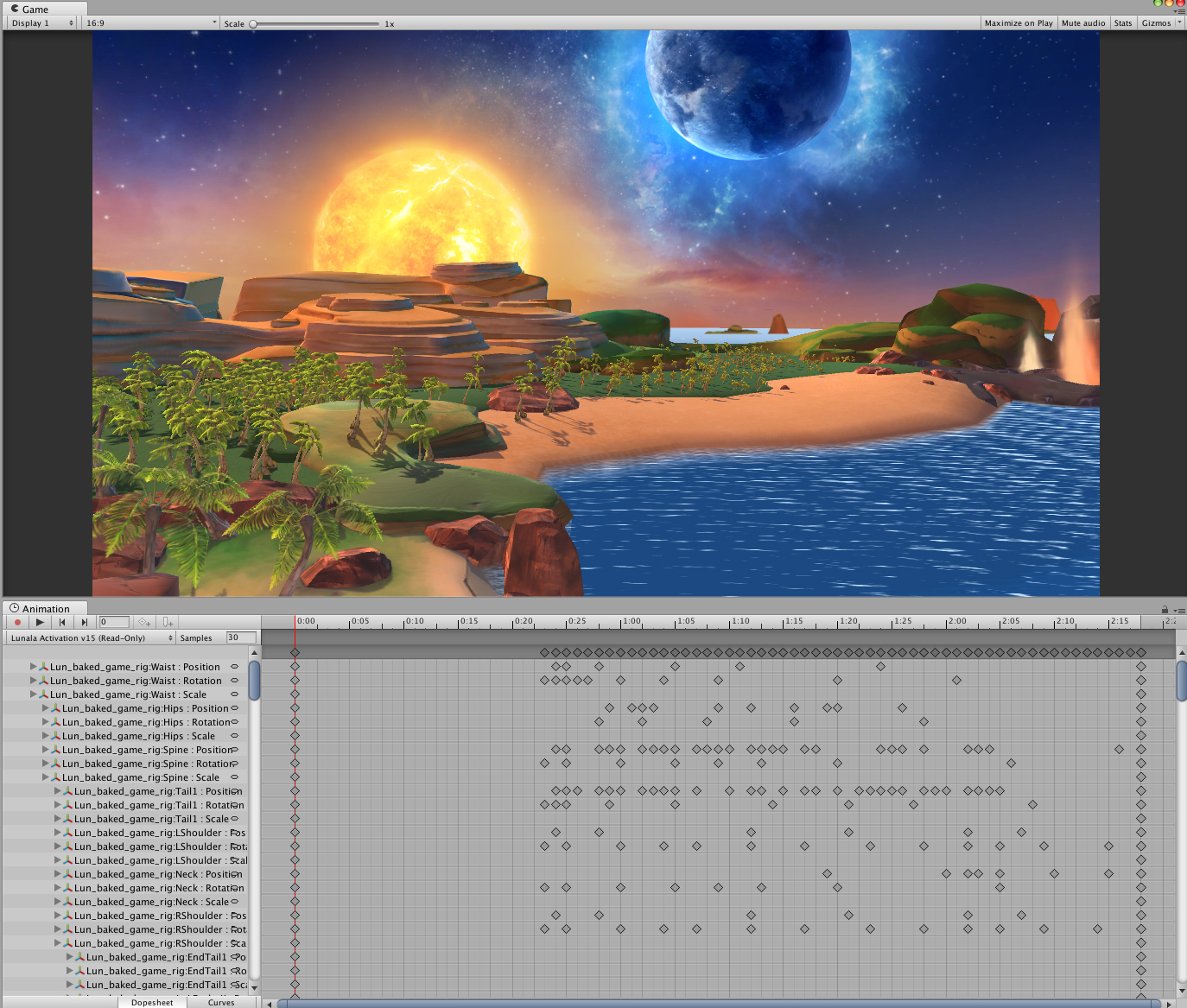

I created a few Unity scenes as proof of concept in order to prepare for the task. These scenes allowed us to determine what assets could be repurposed from the original animated spot and what would need to be optimized for the mobile app.

An early test showing how the poster artwork triggers the Lunala character. Notice the very rough environment around it. This was also used to judge the accuracy of the AR tracker.

Work in Progress capture of the AR Poster in action (2x speed). No animation yet (just an idle state loop) but it does show some texture, lighting and atmosphere work.

Close to final version. Idle and active animations have been added for both characters. The transition and idle FX around the poster frame are almost final. This version also shows a matte painting that was added as a background.

Screen capture of the final Unity scene (looped). In the actual app, only a section of this was visible because of the picture frame. Since I was not inside a typical compositing package like Nuke or AE, I had to come up with ways to make the scene look pretty without leaning on post effects that would slow down performance.

This is how the final product looked through a mobile device. The poster would trigger the AR experience, allowing the user to view into another dimension. Even turning the camera a few degrees would reveal new parts of the world thanks to having parallax in the scene.

VR EXPERIENCE

We decided to include a 360 VR Stereo experience. The initial idea was to build it fully in Unity. The Unity player would allow us to craft a fully immersive and interactive real-time experience.

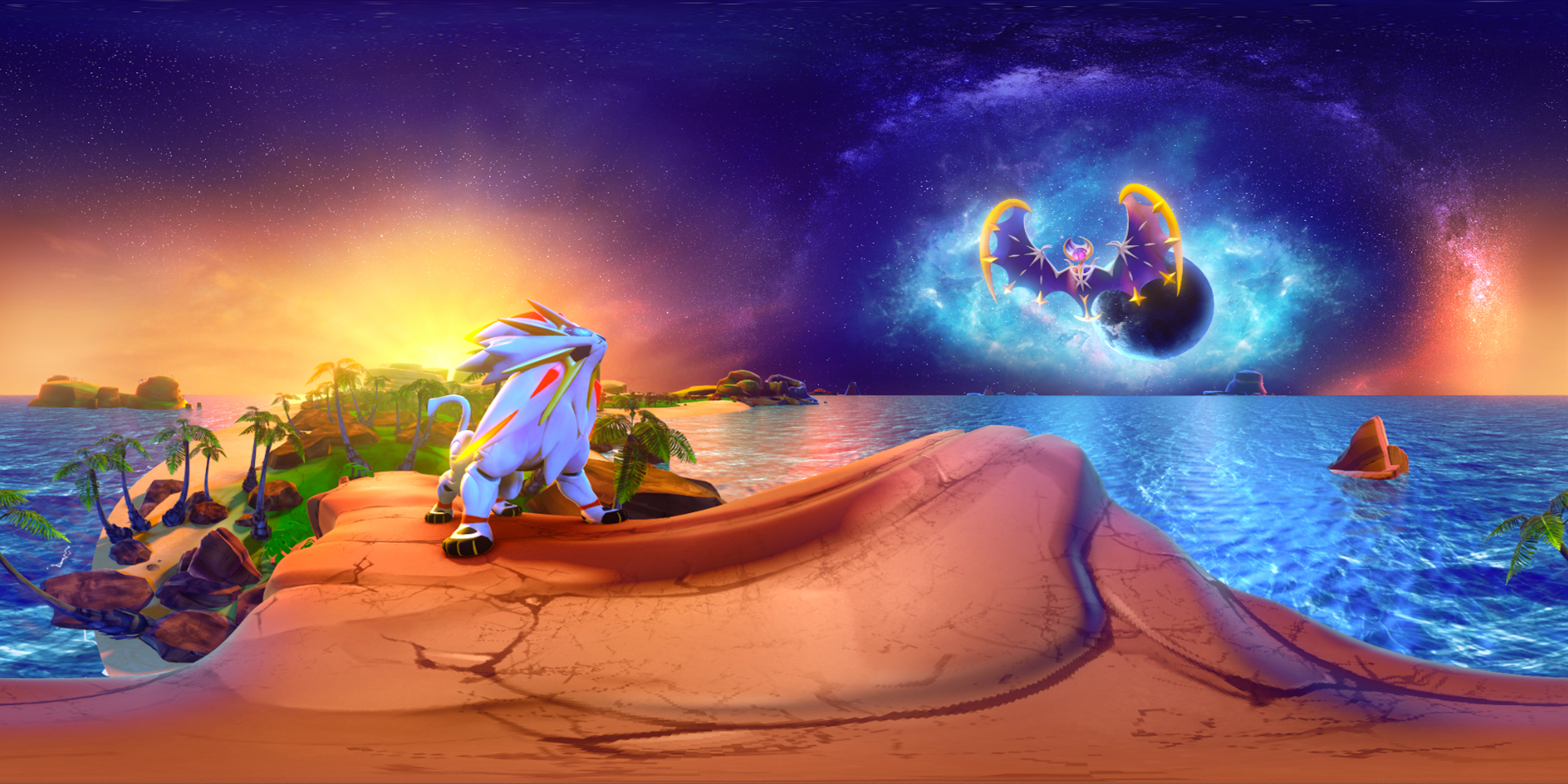

Below is a proof of concept showing a rough Pokemon scene in full 360 goodness. This was a quick screen capture of our Unity scene.

Unfortunately, this test does not show the camera walking around the scene. The Unity scene was set up so the camera could walk (or run) around and collide with any objects.

Due to time constraints, we decided to create a prerendered 360 VR Stereo movie instead. That meant using our usual tools: Maya for 3D and Nuke for compositing and finishing. We rendered full-blown EXR sequences for both eyes (L/R) and then composited them in stereo inside Nuke.

The good thing about doing a prerendered 360 movie is that we could use the techniques we would typically employ in a regular commercial pipeline. Below is the final 360 VR Stereo experience created with prerendered content. The final movie was viewable inside the app using any cardboard device.